As today’s cameras deliver higher resolutions and frame rates, and new system architectures such as vision on the edge face bandwidth limitations, compression becomes a key challenge for advanced image processing applications. But how can the data throughput be reduced without jeopardizing image and inspection quality?

A major trend in the machine vision industry in recent years has been the rise of new-generation CMOS sensors delivering high-resolution images at high frame rates. Many early inspection systems relied on cameras with resolutions below 1 megapixel, and 5 megapixels was considered quite a high resolution for machine vision applications.

Today, 12, 16, 20 or 25 megapixel sensors such as Sony’s IMX54x devices have become common in the industry, and cameras with resolutions above 100 megapixels are available for advanced applications.

Higher resolutions and higher sensor frame rates require higher interface bandwidth. This is why camera interfaces with higher bandwidth have been gaining ground in the machine vision industry in recent years: USB3 Vision, 5GigE, 10GigE, CoaXPress or Camera Link offer suitable bandwidth for demanding vision applications.
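The relationship between resolution, frame rate and interface bandwidth can be sketched with a few lines of arithmetic. The sensor figures below (25 megapixels, 8 bits per pixel, 30 frames per second) are illustrative assumptions, and the link values are nominal line rates that ignore protocol overhead, so the usable bandwidth is lower in practice.

```python
def raw_data_rate_gbps(megapixels, bits_per_pixel, frames_per_second):
    """Uncompressed sensor output in gigabits per second."""
    return megapixels * 1e6 * bits_per_pixel * frames_per_second / 1e9

# Nominal line rates in Gbit/s (protocol overhead ignored).
NOMINAL_LINK_GBPS = {"5GigE": 5.0, "10GigE": 10.0}

# Assumed example camera: 25 MP, 8-bit pixels, 30 fps.
rate = raw_data_rate_gbps(25, 8, 30)
fits = {name: rate <= cap for name, cap in NOMINAL_LINK_GBPS.items()}

print(f"raw data rate: {rate:.1f} Gbit/s")
for name, ok in fits.items():
    print(f"{name}: {'fits' if ok else 'does not fit'}")
```

Doubling either the frame rate or the bit depth in this sketch already exceeds the nominal rate of a single 10GigE link.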

As a result, the host computer and the image processing software must now be able to handle huge amounts of data. This becomes even more critical with new types of vision system layouts. Machine vision systems were traditionally stand-alone machines on a factory floor, using powerful PCs as hosts and performing all processing locally. Today, vision is increasingly used in more mobile, outdoor applications, with embedded computers as hosts and internet connectivity for cloud or edge computing. Even though sensors and interfaces can cope with the high image data flow, it becomes a challenge on the host processing side. This calls for compression.

Trade-offs of compression

Lossless compression algorithms exist. They preserve the original image, but their compression ratio is typically around 2:1, which is insufficient for high-resolution, high-frame-rate applications. Developers of advanced imaging and vision systems therefore face the dilemma of trading off image quality for compression, or compression for image quality.
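What lossless means in practice can be shown with a short round-trip test. The sketch below uses Python's general-purpose zlib (DEFLATE) codec as a stand-in, not an image-specific lossless codec, and a synthetic 256 x 256 test image (a gradient with slight noise) that is purely an assumption. The achieved ratio depends heavily on the image content, so it is printed rather than predicted.

```python
import random
import zlib

random.seed(0)  # reproducible synthetic image
WIDTH, HEIGHT = 256, 256

# Synthetic 8-bit image: horizontal gradient plus slight sensor-like noise.
pixels = bytes(
    min(255, max(0, x + random.randint(-3, 3)))
    for _y in range(HEIGHT)
    for x in range(WIDTH)
)

compressed = zlib.compress(pixels, 9)   # 9 = strongest compression level
restored = zlib.decompress(compressed)

ratio = len(pixels) / len(compressed)
print(f"bit-exact after round trip: {restored == pixels}")
print(f"compression ratio: {ratio:.2f}:1")
```

Every pixel survives unchanged, but noise in the image limits how far the data can shrink.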

Compression algorithms such as MPEG can reduce the volume of image data by a factor of 10 and beyond. However, this comes at the cost of image quality. This may be acceptable for consumer applications, for some industrial and scientific applications, or for archiving inspection records. For high-precision image processing applications, however, the loss in image quality, especially at object edges, can jeopardize the performance and the overall result of the image processing software.

Generic video compression algorithms are designed for compressing movies, whose source data is YUV 4:2:2 or RGB, and they therefore reduce the color resolution through 4:2:2 or 4:2:0 chroma subsampling. They also assume that the pixel resolution is very high, so that high-frequency noise is acceptable and sometimes even desirable because it can give an impression of sharpness. Many newer applications, however, require zooming in or clear edges. In those cases, the high-frequency noise is a problem, and it gets even worse when good zooming algorithms are used.
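Why lossy, transform-based compression hurts edges in particular can be illustrated with a deliberately simplified model: a one-dimensional 8-sample DCT whose coefficients are all rounded to the same coarse step. This is only a sketch of the general principle, not MPEG and not Gidel's algorithm; the step size of 80 and the two test blocks are assumptions chosen to make the effect visible.

```python
import math

N = 8  # block length, mirroring the 8-sample rows of DCT-based codecs

def dct(block):
    """Orthonormal 1-D DCT-II of a list of N samples."""
    out = []
    for k in range(N):
        scale = math.sqrt((1 if k == 0 else 2) / N)
        out.append(scale * sum(
            x * math.cos(math.pi / N * (n + 0.5) * k)
            for n, x in enumerate(block)))
    return out

def idct(coeffs):
    """Inverse of dct() (orthonormal DCT-III)."""
    out = []
    for n in range(N):
        out.append(sum(
            math.sqrt((1 if k == 0 else 2) / N) * c
            * math.cos(math.pi / N * (n + 0.5) * k)
            for k, c in enumerate(coeffs)))
    return out

def lossy_roundtrip(block, step):
    """Round every DCT coefficient to a multiple of `step`, then invert."""
    quantized = [round(c / step) * step for c in dct(block)]
    return idct(quantized)

STEP = 80  # assumed, deliberately coarse quantization step

flat = [100] * N                          # uniform surface
edge = [0, 0, 0, 0, 255, 255, 255, 255]   # sharp object edge

flat_rec = lossy_roundtrip(flat, STEP)
edge_rec = lossy_roundtrip(edge, STEP)

print("flat:", [round(v, 1) for v in flat_rec])
print("edge:", [round(v, 1) for v in edge_rec])
```

In this model the flat block comes back as a flat block, with at most a brightness offset, whereas the plateaus on either side of the edge come back with ripples. These ripples are the high-frequency noise that becomes visible when zooming in.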

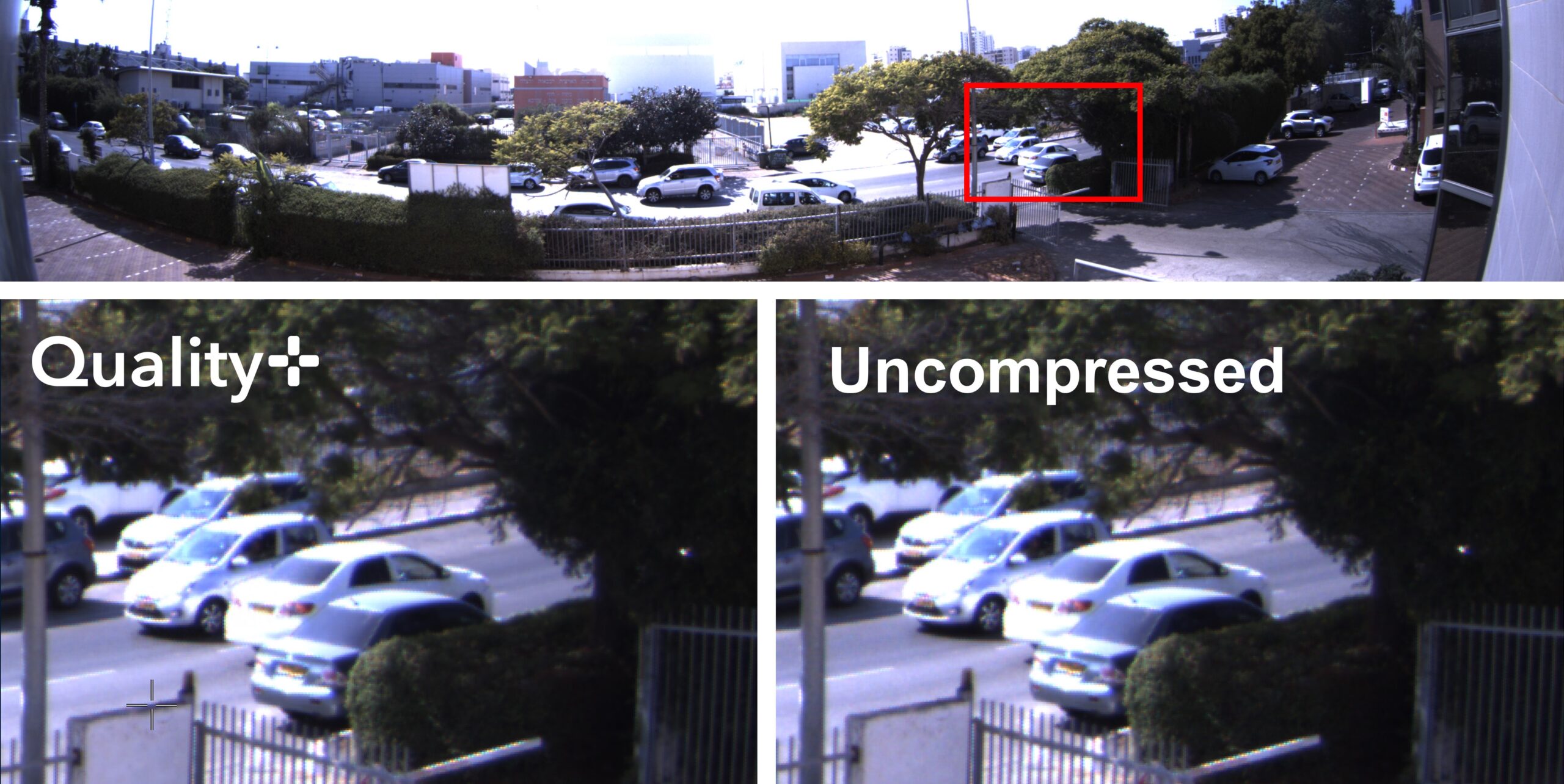

Gidel, an Israeli company specializing in FPGA development for vision applications, has a long track record in addressing this specific challenge. More than 25 years ago, the company had already patented Gidel Imaging, a software tool that enables zooming and enhancing of compressed images by reducing their high-frequency noise. It was the result of extensive research into algorithms that can predict the correct values of the DCT (discrete cosine transform) coefficients before quantization. Now, with its Quality+ compression, Gidel has found a way to significantly reduce or eliminate this noise in the first place.

Today, Gidel introduces Gidel Quality+ Compression, a new compression algorithm designed to address the challenges of today’s vision applications. Quality+ Compression provides a high compression ratio while maintaining high image quality. It can process more than 1 Gigapixel per second per camera in real time on an FPGA with low power consumption, which makes it especially suitable for embedded computing.

Image compression at the acquisition stage

Gidel’s Quality+ Compression is also designed to compress the streams of many cameras at line speed, in real time, at more than 1 Gigapixel per second per camera. It runs on the FPGAs that Gidel implements in its high-performance frame grabbers and FantoVision embedded computers. System engineers can also design their own image acquisition devices using Gidel’s FPGA modules.

The ability to perform the compression with limited processing power and power consumption means that compression can take place at the earliest image acquisition stage, before recording or image processing. This offloads tasks from the GPU or CPU of the system, avoids bottlenecks between the frame grabber and the host processor, and reduces the amount of data that must be processed, stored or, in an edge computing application, uploaded to the cloud. Quality+ compression requires only a small amount of FPGA resources. As a result, with Gidel’s InfiniVision software, ten or more cameras, each running at 1 Gigapixel per second, can be grabbed and compressed in real time on Gidel’s FPGA platform. This opens unprecedented possibilities to design high-speed, high-resolution vision applications with small size, weight and power consumption (SWaP).
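The scale of such a multi-camera setup is easier to grasp as a data volume. The sketch below takes the ten cameras and 1 Gigapixel per second from the text and assumes 8-bit pixels. The ratios 2:1 and 10:1 are the generic figures quoted earlier for lossless and lossy codecs, not specifications of Quality+.

```python
CAMERAS = 10              # "ten cameras", from the text
PIXELS_PER_SECOND = 1e9   # 1 Gigapixel per second per camera, from the text
BITS_PER_PIXEL = 8        # assumption: 8-bit monochrome pixels

def data_rate_gbytes(ratio):
    """Aggregate data rate in GB/s after compression by `ratio`:1."""
    raw_bytes = CAMERAS * PIXELS_PER_SECOND * BITS_PER_PIXEL / 8
    return raw_bytes / ratio / 1e9

# 1 = uncompressed, 2 = typical lossless, 10 = generic lossy figure
for ratio in (1, 2, 10):
    rate = data_rate_gbytes(ratio)
    tb_per_hour = rate * 3600 / 1000
    print(f"{ratio:>2}:1 -> {rate:5.1f} GB/s, {tb_per_hour:5.1f} TB per hour")
```

Under these assumptions the uncompressed streams add up to 10 GB per second, which is why compressing before the data reaches the host matters.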

To meet the demands of today’s high-resolution and high-speed imaging, smart compression algorithms are required that deliver a high compression ratio while maintaining the required image quality. Each application has its own definition of image quality. Therefore, the optimal balance between compression ratio and image quality can only be achieved with a differentiated approach and a customizable compression algorithm.

(This article was published in German in Inspect magazine)